Mind Lab Toolkit (MinT)

Building the Infrastructure for Experiential Intelligence

MinT (Mind Lab Toolkit) is reinforcement learning (RL) infrastructure that helps agents and models learn from real experience. It abstracts away compute scheduling, distributed rollouts, and training orchestration so teams can iterate on learning loops inside real tasks, with real feedback and under real product constraints.

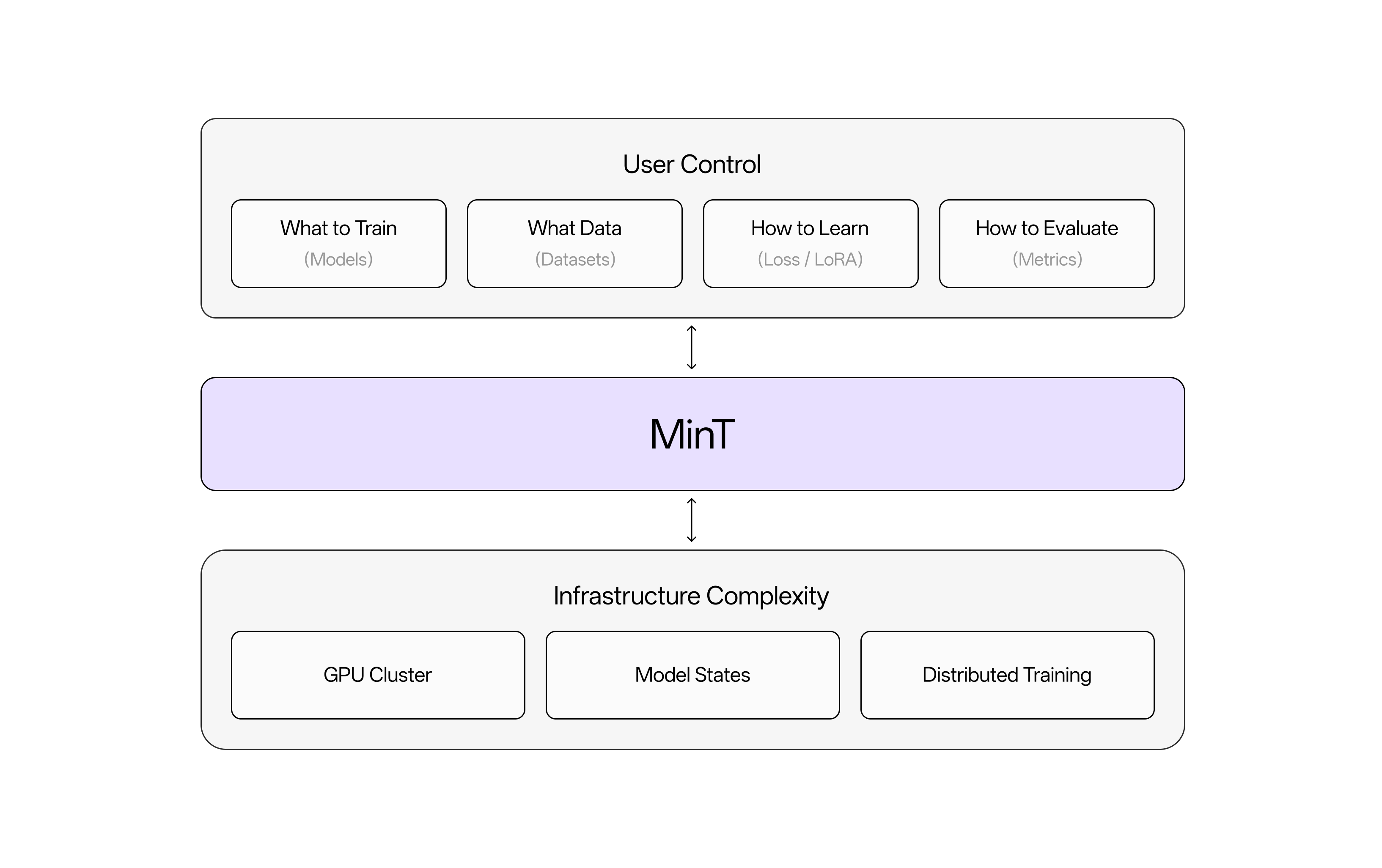

MinT provides a unified, reproducible way to run reinforcement learning across multiple models and tasks, with a strong focus on making LoRA RL simple, stable, and efficient for both mainstream and frontier-scale models. You define what to train, what data to learn from, how to optimize, and how to evaluate; MinT handles the rest.

What is MinT?

MinT focuses on the engineering and algorithmic realization of Reinforcement Learning (RL) across multiple models and tasks. We place particular emphasis on making LoRA RL (reinforcement learning that trains Low-Rank Adaptation adapters instead of full model weights) simple, stable, and efficient for both mainstream and frontier-scale models.

MinT abstracts away infrastructure complexity (GPU clusters, model states, distributed training) so you can focus on what matters: choosing models, preparing data, defining learning objectives, and evaluating results.

Key Features

- Model Support: Qwen3-0.6B, Qwen3-4B-Instruct-2507, Qwen3-30B-A3B-Instruct-2507, and Qwen3-235B-A22B-Instruct-2507 (the list is kept in sync with server availability)

- Algorithm Support: Robust training pipeline centered on RLHF/GRPO and general policy optimization

- Frictionless Migration: Initial API compatibility with ThinkingMachines Tinker, designed as a familiar drop-in upgrade

- Scaled Agentic RL: Capture and utilize experience at scale across wider task distributions, longer interaction horizons, and richer environmental constraints
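GRPO (Group Relative Policy Optimization) samples a group of completions for each prompt and scores each completion against the rest of its group instead of against a learned value function. Below is a minimal sketch of that group-relative advantage, assuming one scalar reward per completion; the function name `group_relative_advantages` is hypothetical, and the sketch makes no claim about how MinT computes advantages internally.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize each completion's reward against its own group.

    All rewards must come from completions sampled for the SAME prompt:
        A_i = (r_i - mean(r)) / (std(r) + eps)
    """
    if len(rewards) < 2:
        # A lone sample has nothing to be compared against.
        return [0.0] * len(rewards)
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use sample std
    return [(r - mean) / (std + eps) for r in rewards]


# Four completions for one prompt, two judged correct (reward 1) and two not (reward 0).
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Completions that beat their group's average receive positive advantages and are reinforced, while below-average ones are pushed down. When every completion earns the same reward, all advantages are zero and that group contributes no update. Implementations differ in details such as which standard deviation they use, so treat this normalization as one reasonable choice.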

Core Capabilities

- LoRA fine-tuning for efficient training of large models

- Distributed data collection and rolling training

- Weight management and model publishing

- Online evaluation on standard CPU clusters
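To show why LoRA keeps training of large models efficient, here is a minimal sketch of the standard LoRA parameterization: a frozen base weight W0 is augmented with a trainable low-rank update, h = W0 x + (alpha / r) B A x, where A has shape (r x d_in) and B has shape (d_out x r). It is plain Python for illustration only; the function names are hypothetical and nothing here describes MinT's internal implementation.

```python
def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def lora_forward(w0, a, b, x, alpha: float, rank: int) -> list[float]:
    """Compute h = W0 x + (alpha / rank) * B (A x).

    W0 (d_out x d_in) is the frozen base weight; only A (rank x d_in)
    and B (d_out x rank) are trained.
    """
    base = matvec(w0, x)
    delta = matvec(b, matvec(a, x))  # project down to `rank` dims, then back up
    scale = alpha / rank
    return [h + scale * d for h, d in zip(base, delta)]


def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters in the LoRA factors A and B (W0 stays frozen)."""
    return rank * (d_out + d_in)


# One 4096 x 4096 projection with a rank-16 adapter.
full = full_finetune_params(4096, 4096)
lora = lora_params(4096, 4096, rank=16)
print(full, lora, f"{lora / full:.2%}")
```

For a single 4096 x 4096 projection at rank 16, the adapter trains 131,072 parameters instead of 16,777,216, which is 1/128 (about 0.78%) of the matrix. In the original LoRA recipe B is initialized to zeros, so the adapted model starts out behaving exactly like the base model.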

Who We Build For

For Builders: Focus on understanding your problem and designing mechanisms, and let MinT handle the infrastructure. We open up the workflows, evaluation schemes, and training infrastructure we use in real business scenarios.

For Researchers: Reduce engineering friction in RL research with standardized logging, reproducible evaluation, portable workflows, and interpretable training data lineage.

Core API Functions

- forward_backward: Computes and accumulates gradients
- optim_step: Updates model parameters
- sample: Generates outputs from trained models
- Weight/optimizer state persistence functions
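To make the division of labor between these calls concrete, here is a minimal, self-contained sketch on a one-parameter model (y = w * x) with squared-error loss. `ToyTrainingClient` and its `save_state`/`load_state` methods are hypothetical: they only mimic the semantics listed above and are not MinT's actual classes or signatures.

```python
from dataclasses import dataclass


@dataclass
class ToyTrainingClient:
    """Hypothetical stand-in for a training client; NOT the MinT API."""

    w: float = 0.0     # the single model weight
    grad: float = 0.0  # gradient accumulated since the last optim_step
    steps: int = 0     # optimizer state: number of updates applied

    def forward_backward(self, batch: list[tuple[float, float]]) -> float:
        """Compute mean squared error on `batch` and ADD its gradient to self.grad."""
        loss = 0.0
        for x, y in batch:
            err = self.w * x - y
            loss += err * err
            self.grad += 2 * err * x / len(batch)
        return loss / len(batch)

    def optim_step(self, lr: float) -> None:
        """Apply one SGD update using the accumulated gradient, then reset it."""
        self.w -= lr * self.grad
        self.grad = 0.0
        self.steps += 1

    def sample(self, x: float) -> float:
        """Generate an output from the current weights."""
        return self.w * x

    def save_state(self) -> dict:
        """Persist weights and optimizer state."""
        return {"w": self.w, "steps": self.steps}

    def load_state(self, state: dict) -> None:
        """Restore weights and optimizer state; no gradient is carried over."""
        self.w, self.steps = state["w"], state["steps"]
        self.grad = 0.0


client = ToyTrainingClient()
data = [(1.0, 3.0), (2.0, 6.0)]  # targets follow y = 3x
for _ in range(50):
    client.forward_backward(data[:1])  # two micro-batches...
    client.forward_backward(data[1:])  # ...accumulate into a single update
    client.optim_step(lr=0.05)
print(round(client.sample(4.0), 3))
```

Because `forward_backward` only accumulates, several calls can precede one `optim_step`, which lets a large effective batch be assembled from smaller pieces; `optim_step` then applies the update and clears the accumulated gradient.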

Values

We insist on real experience as the primary constraint. We insist on transparent engineering. We insist on delivering reusable workflows to the community.